TL;DR

The internet is exploding with talk about chatGPT. Can it be used industrially for customer service and knowledge management? Or should we stick with companies like Zendesk, Freshdesk, KorraAI, Document360 and the like?

Advantages:

- Appears super intelligent! And understands questions very well

- No need to browse through many search results: can summarize many answers into one

Disadvantages:

- Not reliable for niche or very recent subject matter

- Can’t browse through search results and get to the original document

- Limited and costly customization

A.m.a.z.i.n.g

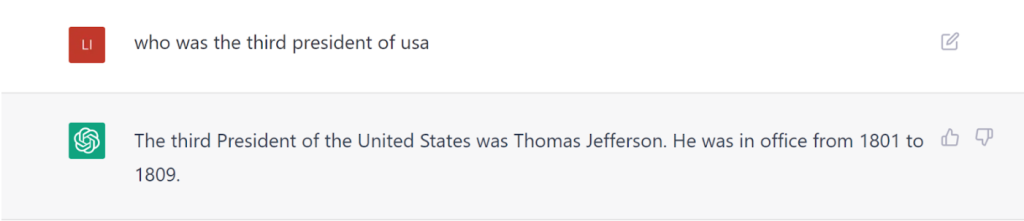

The internet is exploding with talk about chatGPT, the new AI-powered chatbot from OpenAI. It can yack with you, code for you, and best of all: it answers questions really well. Ask it anything known to man, and it will reply.

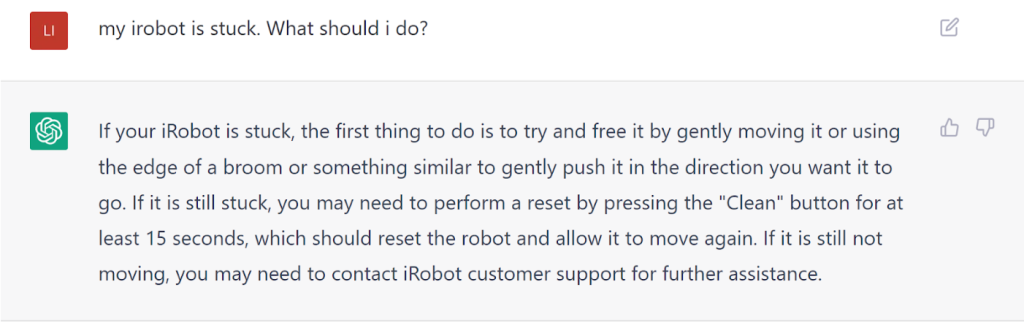

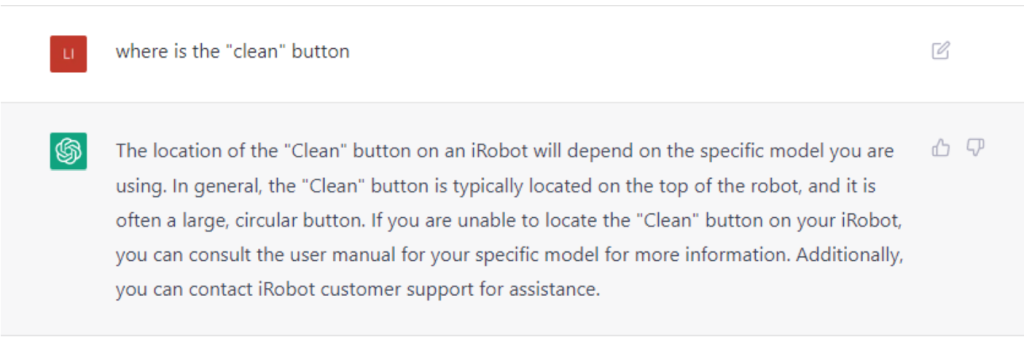

But what’s more important for businesses is its ability to answer questions about product use:

It has been a long time coming: sales departments, customer success execs and knowledge managers have been waiting years for a virtual customer service representative that can truly answer any question. And not only answer it, but hold a conversation with the customer about it, meaning it can answer follow-up questions that rely on information provided in earlier steps:

It’s even better than Google: users don’t need to scan lists of search results. The bot chooses the best answer and summarizes it for them.

A. m. a. z. i. n. g.

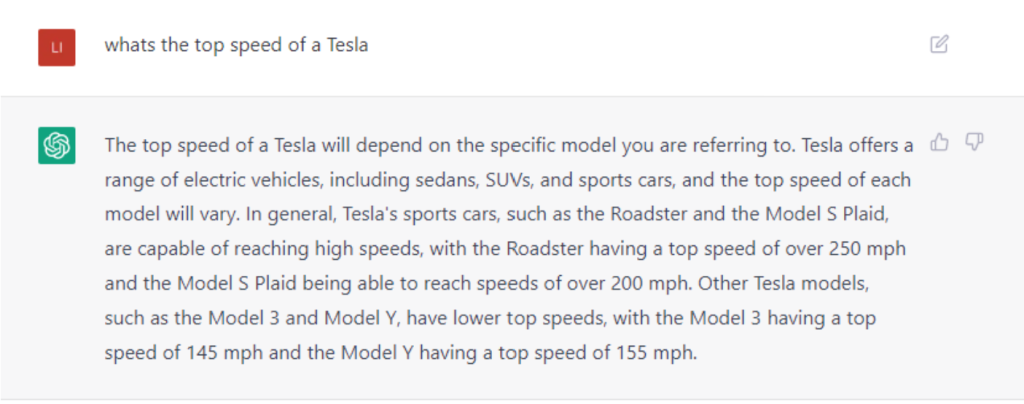

So now, the question is how to put it to use. Let’s think in practical terms: say we work for an electric vehicle manufacturer. We’d like a chatGPT-based representative that can answer the questions prospects have. This seems like a no-brainer:

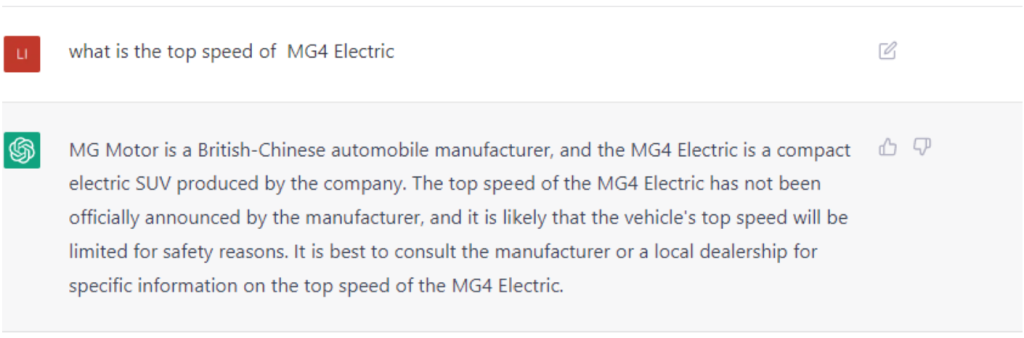

So, this is a Tesla. But what if the EV only came out this year, 2022? The bot’s knowledge only goes up to 2021.

Take the MG4 Electric, which was announced on June 22, 2022: “The MG4 Electric accelerates from 0 to 100 km/h in less than 8 seconds, while the top speed is set at 160 km/h.”

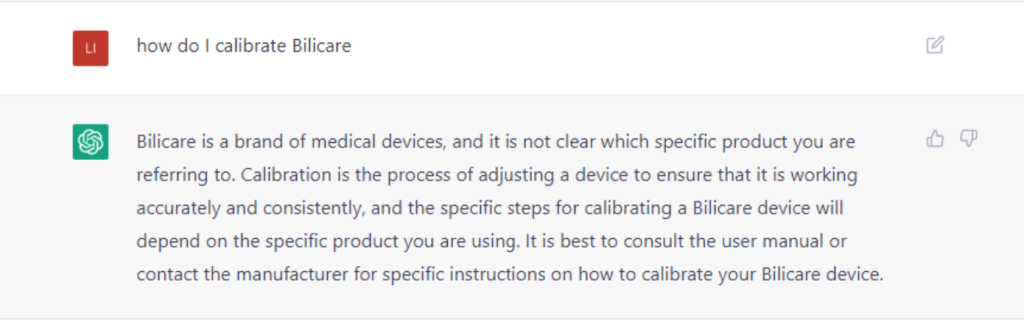

Other problems arise when the product is a more technical device with a small user base.

As chatGPT said, it is a brand of medical devices, so the answer makes perfect sense. But beyond that, it is of no use to either users or knowledge managers.

Customization

So, all in all, we need a way to teach chatGPT new information. How do we do this?

If you are using a search engine or a knowledge base like Zendesk, Freshdesk, Korra or Google Drive, you know how easy it is to feed new documents and videos to the engine: just drag and drop them, or provide a Dropbox or YouTube link. Can one do the same with GPT-type models?

OpenAI, the company behind chatGPT, created the Large Language Models GPT1, GPT2 and then GPT3. These language models form the foundation of chatGPT; OpenAI even nicknamed chatGPT “GPT 3.5”. While we still don’t have documentation for a chatGPT API, we can learn a lot by looking at GPT3 and the ways it can be used.

These models are used in much the same way: you ask a question, and the model generates an answer based on the knowledge stored inside it. So the question is: can we add knowledge to it?

The answer is yes! But unfortunately, there are many limitations.

There are two main ways that GPT3 can be used as a base for customer service, support, or knowledge base management.

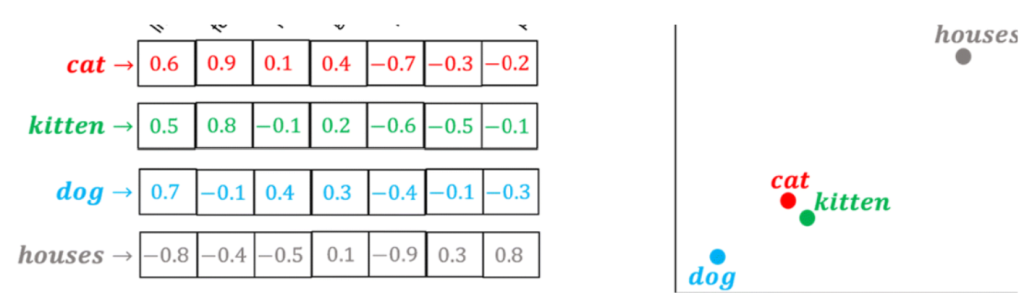

The first is called embeddings. Embeddings are the underlying mechanism of AI-based search. In the old Elastic-based knowledge world, sentences were broken into keywords and stored in a dictionary called an ‘inverted index’. A question would then be matched against this dictionary using a formula called TF-IDF, which combines how frequently a keyword appears inside a document or web page (term frequency) with how rare, or special, that keyword is across all documents (inverse document frequency).
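To make the keyword approach concrete, here is a minimal Python sketch of an inverted index with classic TF-IDF scoring. The function names and sample documents are hypothetical, and production engines such as Elasticsearch use more refined scoring variants; this only illustrates the idea.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs: list[str]) -> dict[str, dict[int, int]]:
    # Maps each keyword to {doc_id: number of occurrences in that document}
    index: dict[str, dict[int, int]] = defaultdict(dict)
    for doc_id, doc in enumerate(docs):
        for term, count in Counter(tokenize(doc)).items():
            index[term][doc_id] = count
    return index


def search(query: str, docs: list[str],
           index: dict[str, dict[int, int]]) -> list[tuple[int, float]]:
    n_docs = len(docs)
    doc_lengths = [len(tokenize(d)) for d in docs]
    scores: dict[int, float] = defaultdict(float)
    for term in tokenize(query):
        postings = index.get(term)
        if not postings:
            continue  # keyword never seen in any document: contributes nothing
        # IDF: keywords found in few documents get a higher weight
        idf = math.log(n_docs / len(postings))
        for doc_id, count in postings.items():
            tf = count / doc_lengths[doc_id]  # how frequent the keyword is in this doc
            scores[doc_id] += tf * idf
    # Best-matching documents first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


docs = [
    "How to charge the battery at home",
    "Battery warranty covers eight years",
    "Adjusting the seats and mirrors",
]
index = build_inverted_index(docs)
results = search("charge battery", docs, index)
print(results)
```

Here the first document wins because it contains both query keywords, and "charge" is rarer (and therefore weighted more) than "battery". Note that a question phrased as "where do I plug in the car?" would match nothing, which is exactly the weakness of keyword search.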

This kind of keyword-based search technology is no longer good enough. Modern AI-based search engines take advantage of language models like BERT, GPT3 and others: each sentence in the document is fed into the model, and a vector comes out. This vector, a long list of numbers called an embedding, is then stored in a database. When a question comes in, the engine creates an embedding for it too and finds the closest embedding in the database. Miraculously, the geometrically closest embedding is also the one most meaningful to the question. For single words, it looks something like this:
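As a toy illustration of that nearest-embedding lookup, the Python sketch below uses hand-made, hypothetical 3-dimensional vectors for a few single words (a real model such as BERT or GPT3 produces vectors with hundreds or thousands of dimensions) and returns the stored word whose vector is closest to the query’s. Cosine similarity is used as the closeness measure; it is a common choice, but an assumption on our part rather than what any particular engine mentioned here uses.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # 1.0 means the vectors point in the same direction, 0.0 means unrelated
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vec: list[float], database: dict[str, list[float]]) -> str:
    # Return the stored entry whose embedding is most similar to the query's
    return max(database, key=lambda key: cosine_similarity(query_vec, database[key]))


# Hypothetical embeddings; in practice these come from a language model
database = {
    "battery": [0.9, 0.1, 0.0],
    "warranty": [0.1, 0.9, 0.1],
    "seat": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # pretend embedding of the word "charger"
print(nearest(query, database))
```

Unlike the keyword index, "charger" finds "battery" even though the two words share no letters in common as keywords; the match comes purely from the geometry of the vectors. At scale, a dedicated vector database replaces the linear scan done by `max`.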

So GPT3 has an API for feeding documents into it. The limitation is that the documents have to be short, 2-3 pages in length and no more, and besides, an API spells development work. There is no application or UI for uploading documents, searching them, presenting results, etc.
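Because each document has to be short, long manuals must first be split into pieces before they are fed to the API. A minimal sketch of that step follows, assuming a word-based limit: the function name `chunk_words` and the 500-word / 50-word-overlap defaults are illustrative choices only, since the real limits are counted in tokens and depend on the model.

```python
def chunk_words(text: str, max_words: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words, so text near a chunk boundary
    appears in both neighbouring chunks instead of being cut off.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


manual = " ".join(f"w{i}" for i in range(1200))  # stand-in for a long manual
pieces = chunk_words(manual)
print(len(pieces))
```

Each piece would then be embedded and stored separately, which is part of the development work that a drag-and-drop knowledge base hides from the user.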

Second, GPT3 can be fine-tuned. This is useful for exactly those cases where the subject matter at hand is specialized: a medical device, a unique technological area, etc. But the process is rather painful: knowledge managers have to collect question-answer pairs and feed them to the beast. Again, the problem is that this is a very long and involved process. One has to know all the questions and select the answers, and do all that among the mountains of material every product has: for Tesla we are talking about 250 hours of reading material and 66 videos. Oh, and what about videos? They can’t be included either. And again, all of this requires significant development effort.
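To give a feel for what "collecting question-answer pairs" turns into, here is a minimal Python sketch that writes pairs in the prompt/completion JSONL layout that OpenAI documented for GPT3 fine-tuning. The separator and stop strings, the function name `to_finetune_jsonl`, and the sample pair are our own illustrative choices, and the expected format may differ for newer models, so check the current documentation before relying on it.

```python
import json

SEPARATOR = "\n\n###\n\n"  # marks the end of each prompt (illustrative choice)
STOP = " END"              # marks the end of each completion (illustrative choice)


def to_finetune_jsonl(pairs: list[tuple[str, str]]) -> str:
    # One JSON object per line, each holding a prompt and its completion
    lines = []
    for question, answer in pairs:
        record = {
            "prompt": question.strip() + SEPARATOR,
            # Completions conventionally start with a space and end with a stop sequence
            "completion": " " + answer.strip() + STOP,
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


pairs = [("What is the top speed of the MG4 Electric?", "160 km/h.")]
jsonl = to_finetune_jsonl(pairs)
print(jsonl)
```

The code is the easy part; the hard part is producing thousands of accurate pairs like this one by hand from the product’s documentation.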

Advantages and disadvantages

To summarize, when we use chatGPT, or GPT in general, for customer service tasks and knowledge bases, we see the following:

Advantages:

- Appears super intelligent! And understands questions very well

- No need to browse through many search results: can summarize many answers into one

Disadvantages:

- Not reliable for niche or very recent subject matter

- Users can’t browse through search results to check the original document, which can lead to errors

- Limited and costly customization